
Haskell Communities and Activities Report

Eighteenth edition – May 2010

Janis Voigtländer (ed.)

Robin Adams

Krasimir Angelov

Heinrich Apfelmus

Jim Apple

Dmitry Astapov

Justin Bailey

Doug Beardsley

Jean-Philippe Bernardy

Tobias Bexelius

Edwin Brady

Gwern Branwen

Joachim Breitner

Roman Cheplyaka

Adam Chlipala

Olaf Chitil

Jan Christiansen

Alberto Gomez Corona

Duncan Coutts

Simon Cranshaw

Jacome Cunha

Nils Anders Danielsson

Larry Diehl

Atze Dijkstra

Facundo Dominguez

Jonas Duregard

Marc Fontaine

Patai Gergely

Brett G. Giles

Andy Gill

George Giorgidze

Dmitry Golubovsky

Carlos Gomez

Torsten Grust

Jurriaan Hage

Bastiaan Heeren

Claude Heiland-Allen

Jeroen Janssen

Florian Haftmann

David Himmelstrup

Guillaume Hoffmann

Martin Hofmann

Csaba Hruska

Paul Hudak

Jasper Van der Jeugt

Farid Karimipour

Oleg Kiselyov

Lennart Kolmodin

Michal Konecny

Lyle Kopnicky

Eric Kow

Sean Leather

Bas Lijnse

Andres Löh

Tom Lokhorst

Rita Loogen

Ian Lynagh

John MacFarlane

Christian Maeder

Jose Pedro Magalhães

Ketil Malde

Arie Middelkoop

Ivan Lazar Miljenovic

Neil Mitchell

Dino Morelli

Matthew Naylor

Jürgen Nicklisch-Franken

Rishiyur Nikhil

Thomas van Noort

Johan Nordlander

Miguel Pagano

Jens Petersen

Simon Peyton Jones

Jason Reich

Matthias Reisner

Stephen Roantree

Fred Ross

Alberto Ruiz

David Sabel

Antti Salonen

Ingo Sander

Uwe Schmidt

Martijn Schrage

Tom Schrijvers

Jeremy Shaw

Axel Simon

Michael Snoyman

Martijn van Steenbergen

Martin Sulzmann

Doaitse Swierstra

Henning Thielemann

Simon Thompson

Wren Ng Thornton

Jared Updike

Marcos Viera

Sebastiaan Visser

Janis Voigtländer

Jan Vornberger

Gregory D. Weber

Stefan Wehr

Mark Wotton

Kazu Yamamoto

Brent Yorgey

Preface

This is the 18th edition of the Haskell Communities and Activities Report.

There is lots of interesting new material; web development in particular has become a hot topic with much activity.

As usual, fresh entries are formatted using a blue background, while updated entries have a header with a blue background.

As in the last edition, entries for which I received a liveness ping, but which have seen no essential update for a while, have been replaced with online pointers to previous versions.

Other entries on which no new activity has been reported for a year or longer have been dropped completely.

Please do revive such entries next time if you do have news on them.

A call for new entries and updates to existing ones will be issued on the usual mailing lists in October.

Now enjoy the current report and see what other Haskellers have been up to lately.

Any kind of feedback is of course very welcome. Specifically, I have been trying to improve the generation of the HTML version of the report, so any remarks on that output could be helpful.

There is no prize question this time. ☺

Janis Voigtländer, University of Bonn, Germany, <hcar at haskell.org>

1 Information Sources

There are plenty of academic papers about Haskell and plenty of

informative pages on the HaskellWiki. Unfortunately, there is not much

between the two extremes. That is where The Monad.Reader tries to fit

in: more formal than a Wiki page, but more casual than a journal

article.

There are plenty of interesting ideas that maybe do not warrant an

academic publication—but that does not mean these ideas are not

worth writing about! Communicating ideas to a wide audience is much

more important than concealing them in some esoteric journal. Even if

it has all been done before in the Journal of Impossibly Complicated

Theoretical Stuff, explaining a neat idea about “warm fuzzy things”

to the rest of us can still be plain fun.

The Monad.Reader is also a great place to write about a tool or

application that deserves more attention. Most programmers do not

enjoy writing manuals; writing a tutorial for The Monad.Reader,

however, is an excellent way to put your code in the limelight and

reach hundreds of potential users.

Since the last HCAR there has been one new issue, featuring articles

on space profiling, underappreciated monads, defining monads

operationally, and STM. The next issue will be published in May.

Further reading

http://themonadreader.wordpress.com/

The goal of the Haskell Wikibook project is to build a community textbook

about Haskell that is at once free (as in freedom and in beer), gentle, and

comprehensive. We think that the many marvelous ideas of lazy functional

programming can and thus should be accessible to everyone in a central

place. In particular, the Wikibook aims to answer all those conceptual questions that are frequently asked on the Haskell mailing lists.

Everyone, including you, dear reader, is invited to contribute, be it by spotting mistakes and asking for clarifications or by ruthlessly rewriting existing material and penning new chapters.

Recent additions include a gentle introduction to generalized algebraic data types (GADTs).

Further reading

http://en.wikibooks.org/wiki/Haskell

1.3 Oleg’s Mini tutorials and assorted small projects

The collection of various Haskell mini tutorials and assorted

small projects

(http://okmij.org/ftp/Haskell/) has received three additions:

Optimal symbolic differentiation

We demonstrate symbolic differentiation of a wide class of numeric

functions without imposing any interpretive overhead. The functions

to differentiate can be given to us in separately compiled modules,

with no available source code. We produce a (compiled, if needed)

function that is an exact, algebraically simplified analytic

derivative of the given function. Our approach is an application of

normalization-by-evaluation. To avoid interpretive overhead, we rely

on Template Haskell (if the interpretive overhead is acceptable,

Template Haskell can be avoided).

Our approach also produces higher- and partial derivatives.

Currently we support algebraic functions and a bit of trigonometry.

http://okmij.org/ftp/Computation/Computation/Generative.html#diff-th
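As a rough illustration of the underlying idea only, the following sketch reifies an overloaded numeric function by applying it to a symbolic variable and then differentiates the resulting expression. It is an interpretive simplification and not the Template Haskell implementation described above: the names Expr, derivative, eval, and poly are hypothetical, and only polynomials (the Num operations) are covered.

```haskell
-- Symbolic expressions in one variable (hypothetical type, for illustration).
data Expr
  = Const Double
  | Var
  | Add Expr Expr
  | Mul Expr Expr
  deriving (Eq, Show)

-- Smart constructors that perform basic algebraic simplification.
add :: Expr -> Expr -> Expr
add (Const a) (Const b) = Const (a + b)
add (Const 0) e         = e
add e         (Const 0) = e
add a         b         = Add a b

mul :: Expr -> Expr -> Expr
mul (Const a) (Const b) = Const (a * b)
mul (Const 0) _         = Const 0
mul _         (Const 0) = Const 0
mul (Const 1) e         = e
mul e         (Const 1) = e
mul a         b         = Mul a b

-- Any function written against the Num class can now be run on Expr.
instance Num Expr where
  (+)         = add
  (*)         = mul
  negate      = mul (Const (-1))
  fromInteger = Const . fromInteger
  abs         = error "abs: not supported in this sketch"
  signum      = error "signum: not supported in this sketch"

-- Differentiate a symbolic expression, simplifying along the way.
diff :: Expr -> Expr
diff (Const _) = Const 0
diff Var       = Const 1
diff (Add a b) = add (diff a) (diff b)
diff (Mul a b) = add (mul (diff a) b) (mul a (diff b))

-- Reify the function by feeding it the symbolic variable, then differentiate.
derivative :: (Expr -> Expr) -> Expr
derivative f = diff (f Var)

-- Evaluate a symbolic expression at a point.
eval :: Expr -> Double -> Double
eval (Const c) _ = c
eval Var       x = x
eval (Add a b) x = eval a x + eval b x
eval (Mul a b) x = eval a x * eval b x

-- An ordinary overloaded function; it knows nothing about Expr.
poly :: Num a => a -> a
poly x = x * x + 3 * x + 1

main :: IO ()
main = do
  print (derivative poly)
  print (eval (derivative poly) 2)
```

Because poly is written only against Num, the same definition can be run on Double for ordinary evaluation and on Expr for differentiation.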

Logic programming in Haskell optimized for reasoning

We demonstrate an executable model of the evaluation of definite logic

programs, i.e., of resolving Horn clauses presented in the form of

definitional trees. Our implementation, DefinitionTree, is yet another

embedding of Prolog in Haskell. It is distinguished not by speed or

convenience. Rather, it is explicitly designed to formalize

evaluation strategies such as SLD and SLD-interleaving, to be easier

to reason about and so help prove termination and other properties of

the evaluation strategies. The main difference of DefinitionTree from

other embeddings of Prolog in Haskell is the absence of

name-generation effects. We need neither gensym nor the

state monad to ensure the unique naming of logic variables. Since

naming and evaluation are fully separated, the evaluation strategy is

no longer concerned with fresh name generation and so is easier to

reason about equationally. We have indeed used DefinitionTree

to prove basic properties of solution sets obtained by SLD or

SLD-resolution strategies.

http://okmij.org/ftp/Haskell/misc.html#reasoned-LP

Choosing a type-class instance based on the context

This mini-tutorial, written together with Simon Peyton Jones, explains

how to overload operations based not on the type of an expression but

on the class to which an expression’s type belongs. For example, we want to

define an overloaded operation print to be equivalent to

(putStrLn . show) when applied to showable expressions,

whose types are the members of the class Show. For other

types, the operation print should do something

different (e.g., print that no show function is available, or, for

Typeable expressions, write their type instead). The problem is not

trivial because normally the type-checker selects an instance of the

type-class based only on the instance head. The instance constraints

are not taken into account during the selection process.

The trick is to re-write a constraint C a which succeeds or

fails, into a predicate constraint C’ a flag, which always succeeds,

but once discharged, unifies flag with a type-level

Boolean HTrue or HFalse.

http://okmij.org/ftp/Haskell/types.html#class-based-overloading
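The flag idea can be sketched as follows. Note that this sketch swaps in a closed type family (a GHC feature added after this report) to compute the type-level Boolean, instead of the class-based encoding with HTrue and HFalse used in the tutorial. The names IsShowable, Describe, and describe are hypothetical, and the set of "showable" types is enumerated by hand.

```haskell
{-# LANGUAGE DataKinds, FlexibleContexts, FlexibleInstances, KindSignatures,
             MultiParamTypeClasses, ScopedTypeVariables, TypeFamilies,
             UndecidableInstances #-}

import Data.Proxy (Proxy (..))

-- Type-level predicate: which types should be treated as showable.
-- The list is maintained by hand in this sketch.
type family IsShowable a :: Bool where
  IsShowable Int  = 'True
  IsShowable Bool = 'True
  IsShowable a    = 'False

-- Helper class indexed by the flag; instance selection now depends
-- only on the flag, which appears in the instance head.
class Describe' (flag :: Bool) a where
  describe' :: Proxy flag -> a -> String

instance Show a => Describe' 'True a where
  describe' _ = show

instance Describe' 'False a where
  describe' _ _ = "<no Show instance>"

-- User-facing class: a single instance that computes the flag
-- and dispatches on it.
class Describe a where
  describe :: a -> String

instance Describe' (IsShowable a) a => Describe a where
  describe = describe' (Proxy :: Proxy (IsShowable a))

main :: IO ()
main = do
  putStrLn (describe (42 :: Int))
  putStrLn (describe True)
  putStrLn (describe not)
```

The point carried over from the tutorial is that the Show constraint never takes part in choosing the instance; only the computed flag does.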

The “Haskell Cheat Sheet” covers the syntax, keywords, and other

language elements of Haskell 98. It is intended for beginning to

intermediate Haskell programmers and can even serve as a memory aid to

experts.

The cheat sheet is distributed as a PDF and literate source file.

Spanish and Japanese translations are also available.

Further reading

http://cheatsheet.codeslower.com

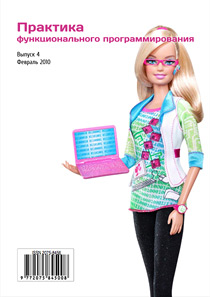

1.5 Practice of Functional Programming

“Practice of Functional Programming” is a Russian electronic magazine promoting functional programming. The magazine features articles that cover both theoretical and practical aspects of the craft. Most of the already published material is directly related to Haskell.


The magazine attempts to keep a bi-monthly release schedule, with Issue #6 slated for release in June 2010.

Full contents of current and past issues are available in PDF from the official site of the magazine free of charge.

Articles are in Russian, with English annotations.

Further reading

http://fprog.ru/ for issues ##1–5

1.6 Cartesian Closed Comic

Cartesian Closed Comic, or CCC, is a webcomic about Haskell, the Haskell community,

and anything else related to Haskell. It is published irregularly. The comic is

sometimes inspired by “Quotes of the week” published in Haskell Weekly News. New

strips are posted to the Haskell reddit and Planet Haskell. The archives are also

available.

Further reading

http://ro-che.info/ccc/

2 Implementations

2.1 The Glasgow Haskell Compiler

In the past 6 months we have made the first 2 releases from the 6.12 branch. 6.12.1 came out in December, while 6.12.2 was released in April. The 6.12.2 release fixes many bugs relative to 6.12.1, including closing 81 trac tickets. For full release notes, and to download it, see the GHC webpage (http://www.haskell.org/ghc/download_ghc_6_12_2.html). We plan to do one more release from this branch before creating a new 6.14 stable branch.

GHC 6.12.2 will also be included in the upcoming Haskell Platform release (→5.2). The Haskell Platform is the recommended way for end users to get a Haskell development environment.

Ongoing work

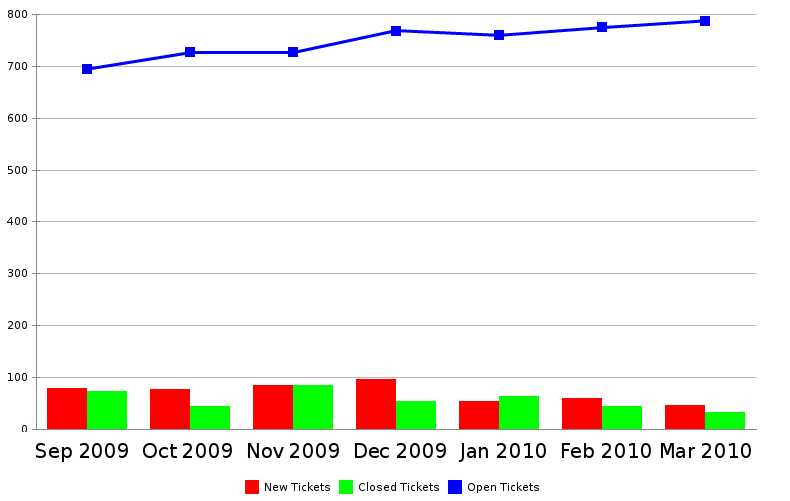

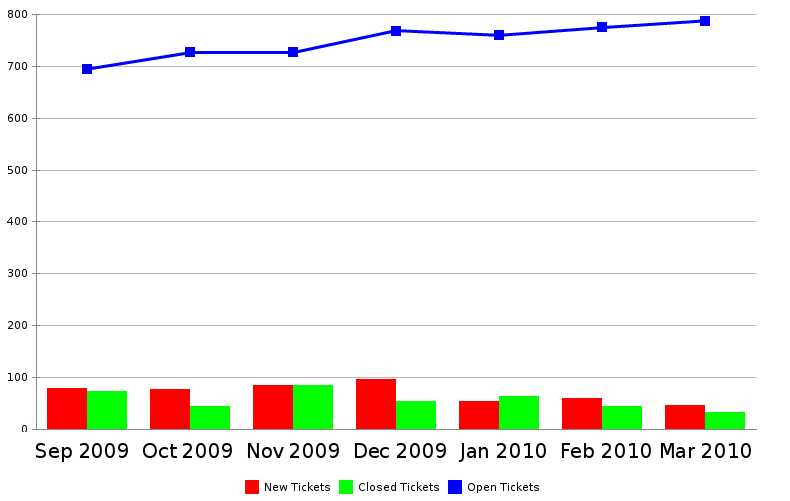

Meanwhile, in the HEAD, the last 6 months have seen more than 1000 patches pushed from more than a dozen contributors. As the following graph shows, tickets are still being opened faster than we can close them, with the total open tickets growing from around 700 to almost 800. We will be looking in the near future at improving the effectiveness of the way we use the bug tracker.

Language changes

We have made only a few small language improvements.

The most significant ones concern quasi-quotations, implementing

suggestions from Kathleen Fisher:

- Quasi-quotes can now appear as a top-level declaration, or in a type, as well as in a pattern or expression.

- Quasi-quotes have a less noisy syntax (no “$”).

Here is an example that illustrates both:

f x = x+1

[pads| ...blah..blah... |]

The second declaration uses the quasi-quoter called pads (which must be in scope) to parse the “...blah..blah...”, and return a list of Template Haskell declarations, which are then spliced into the program in place of the quote.
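To make the declaration form concrete, here is a minimal sketch of a quasi-quoter that produces top-level declarations. The quoter name consts and its tiny "name value" input format are hypothetical and unrelated to pads. The QuasiQuoter record shown is the one from the template-haskell package as shipped with later GHC releases, where the quoteType and quoteDec fields exist.

```haskell
import Language.Haskell.TH
import Language.Haskell.TH.Quote (QuasiQuoter (..))

-- Hypothetical quasi-quoter: every non-blank line "name value" becomes a
-- top-level declaration  name = value  with an integer literal.
consts :: QuasiQuoter
consts = QuasiQuoter
  { quoteExp  = unsupported "an expression"
  , quotePat  = unsupported "a pattern"
  , quoteType = unsupported "a type"
  , quoteDec  = mapM mkDec . filter (not . null) . map words . lines
  }
  where
    unsupported :: String -> String -> Q a
    unsupported ctx _ = fail ("consts cannot be used as " ++ ctx)

    -- 'read' is partial; a real quoter would report malformed numbers.
    mkDec :: [String] -> Q Dec
    mkDec [name, value] =
      valD (varP (mkName name)) (normalB (litE (integerL (read value)))) []
    mkDec ws = fail ("consts: cannot parse line: " ++ unwords ws)

-- Running the quoter by hand shows the declarations it generates.
-- In a real program the quote  [consts| ... |]  would appear at top level
-- in a *different* module with the QuasiQuotes extension enabled, because
-- a quoter cannot be used in the module that defines it.
main :: IO ()
main = do
  decs <- runQ (quoteDec consts "answer 42\nlimit 7")
  putStrLn (pprint decs)
```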

Type system

Type families remain the hottest bit of GHC’s type system. Simon

PJ has been advertising for some months that he intends to completely

rewrite the constraint solver, which forms the heart of the type

inference engine, and that remains the plan although he is being slow

about it. The existing constraint solver works surprisingly

well, but we have lots of tickets demonstrating bugs in corner cases.

An upcoming epic (70-page) JFP paper “Modular type inference with local assumptions”

brings together all the key ideas; watch Simon’s home page.

The mighty simplifier

One of GHC’s most crucial optimizers is the Simplifier, which is

responsible for many local transformations, plus applying inlining and

rewrite-rules. Over time it had become apparent that the

implementation of INLINE pragmas was not very robust: small changes in

the source code, or small wibbles in earlier optimizations, could mean

that something with an INLINE pragma was not inlined when it should be,

or vice versa.

Simon PJ therefore completely re-engineered the way INLINE pragmas are

handled:

Another important related change is this. Consider

{-# RULES "foo" flip (flop x) = <blah> #-}

test x = flip y ++ flip y

where

y = flop x

GHC will not fire rule “foo” because it is scared about duplicating the redex (flop x). However, if you declare that flop is CONLIKE, thus

{-# NOINLINE CONLIKE [1] flop #-}

this declares that an application of flop is cheap enough that even a shared application can participate in a rule application. The CONLIKE pragma is a modifier on a NOINLINE (or INLINE) pragma, because it really only makes sense to match flop on the LHS of a rule if you know that flop is not going to be inlined before the rule has a chance to fire.
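A complete module along these lines might look as follows. This is a sketch with made-up definitions: flipList stands in for flip (to avoid clashing with the Prelude), and the rule is chosen so that the program computes the same result whether or not it fires. Rules are only applied when compiling with optimization.

```haskell
-- flop is marked CONLIKE: GHC may match the rule below even though
-- the application (flop x) is shared through the binding y.
{-# NOINLINE CONLIKE [1] flop #-}
flop :: Int -> [Int]
flop x = replicate 3 x

-- Kept un-inlined until phase 1 so the rule has a chance to fire first.
{-# NOINLINE [1] flipList #-}
flipList :: [a] -> [a]
flipList = reverse

-- Reversing a list of identical elements is the identity, so the rule
-- is semantically valid for these definitions.
{-# RULES "flipList/flop" forall x. flipList (flop x) = flop x #-}

sample :: Int -> [Int]
sample x = flipList y ++ flipList y
  where
    y = flop x

main :: IO ()
main = print (sample 5)
```

Compiling with -O and -ddump-rule-firings is one way to check whether the rule was actually applied.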

The back end

GHC’s back end has been a ferment of activity. In particular,

- David Terei made an LLVM back end for GHC (http://hackage.haskell.org/trac/ghc/wiki/Commentary/Compiler/Backends/LLVM). It is not part of the HEAD, but we earnestly hope that it will become so.

- John Dias, Norman Ramsey, and Simon PJ made a lot of progress on Hoopl, our new representation for control flow graphs, and accompanying functions for dataflow analysis and transformation. There is a paper (Norman Ramsey, John Dias, and Simon Peyton Jones, Hoopl: A Modular, Reusable Library for Dataflow Analysis and Transformation, submitted to ICFP’10; http://research.microsoft.com/en-us/um/people/simonpj/papers/c/), and Hoopl itself is now a standalone, re-usable Cabal package, which makes it much easier for others to use.

The downside is that the code base is in a state of serious flux:

Runtime system work (SimonM)

There has been a lot of restructuring in the RTS over the past few months, particularly in the area of parallel execution. The biggest change is to the way “blackholes” work: these arise when one thread is evaluating a lazy computation (a “thunk”), and another thread or threads demands the value of the same thunk. Previously, all threads waiting for the result of a thunk were kept in a single global queue, which was traversed regularly. This led to two performance problems. Firstly, traversing the queue is O(n) in the number of blocked threads, and we recently encountered some benchmarks in which this was the bottleneck. Secondly, there could be a delay between completing a computation and waking up the threads that were blocked waiting for it. Fortunately, we found a design that solves both of these problems, while adding very little overhead.

We also fixed another pathological performance case: when a large number of threads are blocked on an MVar and become unreachable at the same time, reaping all these threads was an O(n²) operation. A new representation for the queue of threads blocked on an MVar solved this problem.

At the same time, we rearchitected large parts of the RTS to move from algorithms involving shared data structures and locking to a message-passing style. As things get more complex in the parallel RTS, using message-passing lets us simplify some of the invariants and move towards having less shared state between the CPUs, which will improve scaling in the long run.

The GC has seen some work too: the goal here is to enable each processor (“capability” in the internal terminology) to collect its private heap independently of the other processors. It turns out that this is quite tricky to achieve in the context of the current architecture, but we have made some progress in this direction by privatizing more of the global state and simplifying the GC data structures by removing the concept of “steps”, while keeping a simple aging policy, which is what steps gave us previously.

Data Parallel Haskell

In the last months, our focus has been on improving the scalability of the Quickhull benchmark, and this work is still ongoing. In addition, Roman has invested significant energy into the increasingly popular vector package and the NoSlow array benchmark framework. Package vector is our next-gen sequential array library, and we will replace the current sequential array component (dph-prim-seq) with package vector sometime in the next few months.

We completed a first release of the regular, multi-dimensional array library introduced in the previous status report. The library is called Repa and is available from Hackage (http://hackage.haskell.org/package/repa). The library supports shape-polymorphism and works with both the sequential and parallel DPH base library. We discuss the use and implementation of Repa in a draft paper (Gabriele Keller, Manuel M. T. Chakravarty, Roman Leshchinskiy, Simon Peyton Jones, and Ben Lippmeier: Regular, shape-polymorphic, parallel arrays in Haskell, submitted to ICFP’10; http://www.cse.unsw.edu.au/~chak/papers/KCLPL10.html). We have shown that Repa can produce efficient and scalable code for FFT and relaxation algorithms and would be very interested to hear from early adopters who are willing to try Repa out in an application they care about.

Ben Lippmeier joined the project at the start of the year. He has started to improve our benchmark infrastructure and has worked on Repa.

Other miscellaneous stuff

- GHC makes heavy use of sets and finite maps. Up till now it has used its own home-grown UniqFM and FiniteMap modules. Milan Straka (visiting as an intern from the Czech Republic) has:

  - made GHC use the containers package instead, which happily makes compilation go a few percent faster;

  - developed some improvements to containers that make it go faster still.

  So UniqFM and FiniteMap are finally dead. Hurrah for Hackage!

- The Threadscope tool for visualizing parallel execution was released. The tool is ripe for improvement in many ways; if you are interested in helping, let us know.

Nightly builds

For some time, it has been clear to us that Buildbot is not the perfect tool for our nightly builds. The main problem is that it is very susceptible to network wibbles, which means that many of our builds fail due to a network issue mid-build. Also, any customization beyond that anticipated by the configuration options provided requires some messy Python coding, poking around inside the Buildbot classes. Additionally, we would like to implement a “validate-this” feature, where developers can request that a set of patches is validated on multiple platforms before being pushed. We could not see an easy way to do this with Buildbot.

When the darcs.haskell.org hardware was upgraded, rather than installing buildbot on the new machine, we made the decision to implement a system that better matched our needs instead. The core implementation is now complete, and we have several machines using it for nightly builds.

We are always keen to add more build slaves; please see http://hackage.haskell.org/trac/ghc/wiki/Builder if you are interested. Likewise, patches for missing features are welcome! The (Haskell) code is available at http://darcs.haskell.org/builder/.

LHC is a backend for the Glorious Glasgow Haskell Compiler (→2.1), adding low-level, whole-program optimization to the system. It is based on Urban Boquist’s GRIN language, and using GHC as a frontend, we get most of its great extensions and features.

Essentially, LHC uses the GHC API to convert programs to external core format — it then parses the external core, and links all the necessary modules together into a whole program for optimization. We currently have our own base library (heavily and graciously taken from GHC). This base library is similar to GHC’s (module-names and all), and it is compiled by LHC into external core and the package is stored for when it is needed. This also means that if you can output GHC’s external core format, then you can use LHC as a backend.

The short-term goal is to make LHC faster, easier to use, and more complete in its coverage of Haskell 98.


Helium is a compiler that supports a substantial subset of Haskell 98 (but, e.g.,

n+k patterns are missing). Type classes are restricted to a number of

built-in type classes and all instances are derived. The advantage of Helium is

that it generates novice friendly error feedback. The latest versions of the

Helium compiler are available for download from the new website located at

http://www.cs.uu.nl/wiki/Helium. This website also explains in detail

what Helium is about, what it offers, and what we plan to do in the near and far

future.

We are still working on making version 1.7 available, mainly a matter of updating

the documentation and testing the system. Internally little has changed, but

the interface to the system has been standardized, and the functionality of the

interpreters has been improved and made consistent. We have made new options

available (such as those that govern where programs are logged to). The use

of Helium from the interpreters is now governed by a configuration file, which

makes the use of Helium from the interpreters quite transparent for the programmer.

It is also possible to use different versions of Helium side by side

(motivated by the development of Neon (→5.3.2)).

A student has added parsing and static checking for type class and instance

definitions to the language, but type inferencing and code generating still

need to be added. The work on the documentation has progressed quite a bit,

but there has been little testing thus far, especially on a platform

such as Windows.

2.4 UHC, Utrecht Haskell Compiler

UHC, what is new?

UHC is the Utrecht Haskell Compiler, supporting almost all Haskell98 features and most of Haskell2010, plus

experimental extensions.

After the first release of UHC in spring 2009 we have been working on the next release, which we expect to have available this summer.

Although UHC did start its life as a compiler for research and experimentation,

much of the recent work has focussed on improving and stabilizing UHC for actual use.

The highlights of the next release will be:

- Support for building libraries with Cabal.

- A base library sufficient for Haskell98.

- Support for most of the Haskell2010 language features.

- A new garbage collector, replacing the Boehm GC we have been using until recently.

- More stable implementation of both compiler and runtime, with many bugfixes.

All of the above is already available for download from the UHC svn repository.

UHC, what do we currently do?

As part of the UHC project, the following (student) projects and other activities are underway (in arbitrary order):

- Jan Rochel: “Realising Optimal Sharing”, based on work by Vincent van Oostrum and Clemens Grabmayer.

- Tom Lokhorst: type based static analyses.

- Jeroen Leeuwestein: incrementalization of whole program analysis.

- Atze van der Ploeg: lazy closures.

- Paul van der Ende: garbage collection & LLVM.

- Arie Middelkoop (& Lucilia Camarão de Figueiredo): type system formalization and automatic generation from type rules.

- Jeroen Fokker: GRIN backend, whole program analysis.

- Calin Juravle: base libraries.

- Levin Fritz: base libraries for Java backend.

- Andres Löh: Cabal support.

- José Pedro Magalhães: generic deriving.

- Doaitse Swierstra: parser combinator library.

- Atze Dijkstra: overall architecture, type system, bytecode interpreter backend, garbage collector.

Some of the projects are highlighted directly below.

Type based static analysis (Tom Lokhorst)

We are working on various static optimization transformations on top

of the recently introduced typed core intermediate language. A

particular focus is optimizing code based on the results of a type

based strictness analysis (Stefan Holdermans and Jurriaan Hage, Making “Stricterness” More Relevant, PEPM ’10). We are currently

investigating several approaches to optimizing higher order functions

that are polymorphic in their strictness properties.

Lazy closures (Atze van der Ploeg)

We are investigating cheaper ways to construct closures by re-using

information already present in frames (incarnation records). In this

scheme a frame may be used by a closure after the frame’s function has

ended, so we put frames on the heap instead of the stack. Once a frame’s function has ended, the frame may contain more information than is necessary for the closures that use it; the garbage collector needs to be aware of this so that we do not retain too much.

Garbage collection & LLVM (Paul van der Ende)

We want to extend the LLVM backend of UHC with accurate garbage

collection. The LLVM compiler is known to do various aggressive

transformations that might break static stack descriptors. We will

exploit the existing shadow-stack functionality of the LLVM framework

to connect it with the garbage collection library.

Generic deriving (José Pedro Magalhães)

Recently we wanted to extend the deriving support in UHC to allow

deriving for other common type classes (such as Functor and

Typeable, for example). However, instead of hard-wiring particular

classes in the compiler, we decided to allow the user to specify how instances

should be derived for any type class, using simple generic programming

techniques. Currently we are working on implementing this new feature and

providing deriving support for a number of useful classes.
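To give a flavour of user-specified deriving, the sketch below uses GHC.Generics, the generic deriving mechanism that later became available in GHC. It is not UHC's implementation, and the class FieldCount and the type Shape are hypothetical examples. The class author writes the generic cases once; a user then obtains an instance for a new data type with an empty instance declaration.

```haskell
{-# LANGUAGE DefaultSignatures, DeriveGeneric, FlexibleContexts, TypeOperators #-}

import GHC.Generics

-- Generic worker: counts the fields of the constructor that was used.
class GCount f where
  gcount :: f p -> Int

instance GCount U1 where                      -- constructor without fields
  gcount _ = 0

instance GCount (K1 i c) where                -- a single field
  gcount _ = 1

instance (GCount f, GCount g) => GCount (f :*: g) where   -- several fields
  gcount (x :*: y) = gcount x + gcount y

instance (GCount f, GCount g) => GCount (f :+: g) where   -- choice of constructor
  gcount (L1 x) = gcount x
  gcount (R1 y) = gcount y

instance GCount f => GCount (M1 i c f) where  -- metadata wrapper
  gcount (M1 x) = gcount x

-- User-facing class with a generic default implementation.
class FieldCount a where
  fieldCount :: a -> Int
  default fieldCount :: (Generic a, GCount (Rep a)) => a -> Int
  fieldCount = gcount . from

data Shape
  = Circle Double
  | Rect Double Double
  | Unit
  deriving (Generic)

-- No method definitions needed: the generic default is used.
instance FieldCount Shape

main :: IO ()
main = print (map fieldCount [Circle 1, Rect 2 3, Unit])
```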

Background

UHC actually is a series of compilers of which the last is UHC, plus infrastructure for facilitating experimentation and extension:

- The implementation of UHC is organized as a series of increasingly complex steps, and (independent of these steps) a set of aspects, thus addressing the inherent complexity of a compiler. Executable compilers can be generated from combinations of the above.

- The description of the compiler uses code fragments which are retrieved from the source code of the compilers, thus keeping description and source code synchronized.

- Most of the compiler is described by UUAG, the Utrecht University Attribute Grammar system (→4.1.1), thus providing a more flexible means of tree programming.

For more information, see the references provided.


2.5 Haskell front end for the Clean compiler

We are currently working on a front end for the Clean compiler (→3.2.4)

that supports a subset of Haskell 98. This will allow Clean modules to import

Haskell modules, and vice versa. Furthermore, we will be able to use some of

Clean’s features in Haskell code, and vice versa. For example, we could define

a Haskell module which uses Clean’s uniqueness typing, or a Clean module which

uses Haskell’s newtypes. The possibilities are endless!

Future plans

Although a beta version of the new Clean compiler was released last year to the

institution in Nijmegen, there is still a lot of work to do before we are able

to release it to the outside world. So we cannot make any promises regarding

the release date. Just keep an eye on the Clean mailing lists for any important

announcements!

Further reading

http://wiki.clean.cs.ru.nl/Mailing_lists

Over the past year, work on the Reduceron has continued, and we have

reached our goal of improving runtime performance by a factor of six!

This has been achieved through many small improvements, spanning

architectural, runtime, and compiler-level advances.

Two main by-products have emerged from the work. First, York

Lava, now available from Hackage, is the HDL we use. It is very

similar to Chalmers Lava, but supports a greater variety of primitive

components, behavioral description, number-parameterized types, and a

first attempt at a Lava prelude. Second, F-lite is our subset

of Haskell, with its own lightweight toolset.

There remain some avenues for exploration. We have taken a step

towards parallel reduction in the form of speculative evaluation

of primitive redexes, but have not yet attempted the Reducera

— multiple Reducerons running in parallel. And recently, Jason has

been continuing his work on the F-lite supercompiler (→4.1.4), which is now

producing some really nice results.

Alas, the time to take stock and publish a full account of what we

have already done is rapidly approaching!


2.7 Platforms

2.7.1 Haskell in Gentoo Linux

Gentoo Linux currently officially supports GHC 6.10.4, including the

latest Haskell Platform (→5.2) for x86, amd64, sparc,

and ppc64. For previous GHC versions we also have binaries available

for alpha, hppa and ia64.

The full list of packages available through the official repository

can be viewed at

http://packages.gentoo.org/category/dev-haskell?full_cat.

The GHC architecture/version matrix is available at

http://packages.gentoo.org/package/dev-lang/ghc.

Please report problems in the normal Gentoo bug tracker

at bugs.gentoo.org.

We have also recently started an official Gentoo Haskell blog, where we can tell our users what we are doing: http://gentoohaskell.wordpress.com/.

There is also an overlay which contains more than 300 extra unofficial

and testing packages. Thanks to the Haskell developers using Cabal and

Hackage (→5.1), we have been able to write a tool called

“hackport” (initiated by Henning Günther) to generate Gentoo

packages with minimal user intervention. Notable packages in the overlay include the latest version of the Haskell Platform, the latest 6.12.2 release of GHC, and popular Haskell packages such as pandoc (→6.4.1) and gitit (→6.3.3).

More information about the Gentoo Haskell Overlay can be found at

http://haskell.org/haskellwiki/Gentoo. Using Darcs (→6.1.1),

it is easy to keep up to date, to submit new packages, and to fix any

problems in existing packages. It is also available via the Gentoo

overlay manager “layman”. If you choose to use the overlay, then any problems should be reported on IRC (#gentoo-haskell on freenode), where we coordinate development, or via email <haskell at gentoo.org> (more of the people able to fix the overlay packages can be reached in the IRC channel than via the bug tracker).

Through recent efforts we have developed a tool called

“haskell-updater”

http://www.haskell.org/haskellwiki/Gentoo#haskell-updater

(initiated by Ivan Lazar Miljenovic). It replaces the old ghc-updater script for rebuilding packages when a new version of GHC is installed; it is written in Haskell and also rebuilds broken packages. “haskell-updater” is still in active development to further refine and extend its features and capabilities.

As always we are more than happy for (and in fact encourage) Gentoo

users to get involved and help us maintain our tools and packages,

even if it is as simple as reporting packages that do not always work

or need updating: with such a wide range of GHC and package versions

to co-ordinate, it is hard to keep up! Please contact us on IRC or

email if you are interested!

The Fedora Haskell SIG is an effort to provide good support for Haskell in Fedora.

We have been updating packages for Fedora 13, which is due to ship soon with ghc-6.12.1 (with shared libraries enabled) and haskell-platform-2010.1.0.0; special thanks to Rakesh Pandit for reviewing 11 new packages formerly in ghc extralibs. Darcs has been updated to 2.4 (thanks to Conrad Meyer and Lorenzo Villani for reviewing new dependent packages). New packaging macros in ghc-rpm-macros have removed nearly all the remaining tedium of packaging libraries for Fedora, allowing simpler .spec file templates in the Fedora cabal2spec package.

Fedora 14 changes will probably be more modest: likely 6.12.2 and more libraries and programs from Hackage.

Contributions to Fedora Haskell are welcome: join us on #fedora-haskell on Freenode IRC.

Further reading

3 Language

3.1 Extensions of Haskell

Eden extends Haskell with a small set of syntactic constructs for

explicit process specification and creation. While providing

enough control to implement parallel algorithms efficiently, it

frees the programmer from the tedious task of managing low-level

details by introducing automatic communication (via head-strict

lazy lists), synchronization, and process handling.

Eden’s main constructs are process abstractions and process

instantiations. The function process :: (a -> b) ->

Process a b embeds a function of type (a -> b) into a

process abstraction of type Process a b which,

when instantiated, will be executed in parallel. Process

instantiation is expressed by the predefined infix operator

( # ) :: Process a b -> a -> b. Higher-level

coordination is achieved by defining skeletons, ranging

from a simple parallel map to sophisticated replicated-worker

schemes. They have been used to parallelize a set of non-trivial

benchmark programs.
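
The two constructs and a simple map skeleton can be sketched in plain Haskell. This is a sequential stand-in that only mirrors the types quoted above; it is not the Eden library, nothing here runs in parallel, and the name `parMapModel` is chosen for illustration only.

```haskell
-- Sequential model of Eden's process constructs (an assumption for
-- illustration; real Eden evaluates instantiated processes in parallel).
newtype Process a b = Process (a -> b)

-- Embed a function into a process abstraction.
process :: (a -> b) -> Process a b
process = Process

-- Instantiate a process abstraction on an input.
( # ) :: Process a b -> a -> b
Process f # x = f x

-- A map skeleton: one process instantiation per list element.
-- In this model the instantiations are ordinary function applications.
parMapModel :: (a -> b) -> [a] -> [b]
parMapModel f xs = [process f # x | x <- xs]
```

With these definitions, `parMapModel (* 2) [1, 2, 3]` evaluates to `[2, 4, 6]`; in Eden proper, each instantiation would be a candidate for execution on another processing element.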

Survey and standard reference

Rita Loogen, Yolanda Ortega-Mallén, and Ricardo Peña:

Parallel Functional Programming in Eden, Journal of

Functional Programming 15(3), 2005, pages 431–475.

Implementation

The parallel Eden runtime environment for GHC

6.8.3 is available from the Marburg group on request.

The Eden extension of GHC 6.12 will soon be released.

Support for Glasgow parallel Haskell (GpH, http://haskell.org/communities/11-2008/html/report.html#sect3.1.2) is currently being added to

this version of the runtime environment. It is planned for the

future to maintain a common parallel runtime environment for Eden,

GpH, and other parallel Haskells. A first parallel Haskell Hackathon

took place in St Andrews from December 10th to 12th, 2009.

It was a lively event that triggered various activities to

develop the common parallel runtime environment further.

Parallel program executions can be

visualized using the Eden trace viewer tool EdenTV. Recent results

show that the Eden system behaves equally well on workstation clusters

and on multi-core machines.

Recent and Forthcoming Publications

- Oleg Lobachev and Rita Loogen:

Estimating Parallel Performance, a Skeleton-based Approach,

Technical Report No 2010–2, Department of Mathematics and Computer Science,

Philipps-Universität Marburg, 2010.

- Mischa Dieterle, Thomas Horstmeyer, Rita Loogen:

Skeleton Composition Using Remote Data, in:

Practical Aspects of Declarative Programming 2010 (PADL’10),

LNCS 6009, Springer 2010, 337–353.

- Thomas Horstmeyer, Rita Loogen:

Grace — Graph-based Communication in Eden,

Trends in Functional Programming, Volume 10, Intellect 2010, 1–16.

- Mustafa Aswad, Phil Trinder, Abdallah Al Zain, Greg Michaelson, Jost Berthold:

Low Pain vs No Pain Multi-core Haskells, Trends in Functional Programming,

Volume 10, Intellect 2010, 49–64.

- Lidia Sanchez-Gil, Mercedes Hidalgo-Herrero, Yolanda Ortega-Mallén:

An Operational Semantics for Distributed Lazy Evaluation,

Trends in Functional Programming, Volume 10, Intellect 2010, 65–80.

- Mercedes Hidalgo-Herrero, Yolanda Ortega-Mallén:

To be or not to be... Lazy (in a Parallel Context),

Electronic Notes in Theoretical Computer Science (ENTCS), Vol.

258, Elsevier, 2009.

Further reading

http://www.mathematik.uni-marburg.de/~eden

XHaskell is an extension of Haskell

which combines parametric polymorphism, algebraic data types, and type

classes with XDuce style regular expression types, subtyping, and

regular expression pattern matching.

The latest version can be downloaded via

http://code.google.com/p/xhaskell/

Latest developments

The latest version of the library-based regular expression pattern matching component is available

via the google code web site. We are currently working on a paper

describing the key ideas of the approach.

The focus of the HaskellActor project is on Erlang-style concurrency abstractions.

See for details:

http://sulzmann.blogspot.com/2008/10/actors-with-multi-headed-receive.html.

Novel features of HaskellActor include

- Multi-headed receive clauses, with support for

- guards, and

- propagation

The HaskellActor implementation (as a library extension to Haskell) is available

via

http://hackage.haskell.org/cgi-bin/hackage-scripts/package/actor.

The implementation is stable, but there is plenty of room for optimizations and extensions (e.g. regular expressions in patterns). If this sounds interesting to anybody (students!), please contact me.

Latest developments

We are currently working towards a distributed version of HaskellActor following the approach

of Frank Huch, Ulrich Norbisrath: Distributed Programming in Haskell with Ports, IFL’00.

HaskellJoin is a (library) extension of Haskell to support join patterns. Novelties are

- guards and propagation in join patterns,

- efficient parallel execution model which exploits multiple processor cores.

Latest developments

In his honors thesis, Olivier Pernet (a student of Susan Eisenbach) provides a nicer monadic interface to

the HaskellJoin library.

Further reading

http://sulzmann.blogspot.com/2008/12/parallel-join-patterns-with-guards-and.html

3.2 Related Languages

Curry is a functional logic programming language with Haskell

syntax.

In addition to the standard features of functional programming like

higher-order functions and lazy evaluation, Curry supports features

known from logic programming.

This includes programming with non-determinism, free variables,

constraints, declarative concurrency, and the search for solutions.

Although Haskell and Curry share the same syntax, there is one main

difference with respect to how function declarations are

interpreted.

In Haskell the order in which different rules are given in the source

program has an effect on their meaning.

In Curry, in contrast, the rules are interpreted as equations,

and overlapping rules induce a non-deterministic choice and a

search over the resulting alternatives.

Furthermore, Curry allows functions to be called with free variables

as arguments, which are then bound to those values

that are demanded for evaluation, thus providing for

function inversion.
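
For readers coming from Haskell, both ideas can be approximated with the list monad. The sketch below is a model only: Curry binds free variables on demand rather than enumerating candidates, and the names `coin`, `twoCoins`, `splits`, and `lastViaSearch` are chosen for illustration.

```haskell
-- Overlapping rules such as
--   coin = 0
--   coin = 1
-- denote a choice in Curry; here the choice is modelled as a list of results.
coin :: [Int]
coin = [0, 1]

-- Every combination of alternatives is explored.
twoCoins :: [Int]
twoCoins = do
  x <- coin
  y <- coin
  return (x + y)

-- All ways of splitting a list into a prefix and a suffix.
splits :: [a] -> [([a], [a])]
splits xs = [splitAt n xs | n <- [0 .. length xs]]

-- "Inverting" (++): search for ys and x such that ys ++ [x] == xs.
-- In Curry, ys and x would be free variables; here they are enumerated.
lastViaSearch :: Eq a => [a] -> [a]
lastViaSearch xs = [x | (ys, [x]) <- splits xs, ys ++ [x] == xs]
```

Here `twoCoins` yields `[0,1,1,2]`, and `lastViaSearch [1,2,3]` yields `[3]`; an empty input list yields no solution at all, which corresponds to a failing computation in Curry.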

There are three major implementations of Curry.

While the original implementation PAKCS (Portland Aachen Kiel Curry

System) compiles to Prolog, MCC (Münster Curry Compiler) generates

native code via a standard C compiler.

The Kiel Curry System (KiCS) compiles Curry to Haskell aiming to

provide nearly as good performance for the purely functional part as

modern compilers for Haskell do.

Of these implementations, only MCC will provide type classes in the

near future.

Type classes are not part of the current definition of Curry, though

there is no conceptual conflict with the logic extensions.

Recently, new compilation schemes for translating Curry to Haskell

have been developed that promise significant speedups compared to both

the former KiCS implementation and other existing implementations of

Curry.

There have been research activities in the area of functional logic

programming languages for more than a decade.

Nevertheless, there are still a lot of interesting research topics

regarding more efficient compilation techniques and even semantic

questions in the area of language extensions like encapsulation and

function patterns.

Besides activities regarding the language itself, there is also an

active development of tools concerning Curry (e.g., the documentation

tool CurryDoc, the analysis environment CurryBrowser, the observation

debuggers COOSy and iCODE, the debugger B.I.O. (http://www-ps.informatik.uni-kiel.de/currywiki/tools/oracle_debugger), EasyCheck (http://haskell.org/communities/05-2009/html/report.html#sect4.3.2), and CyCoTest).

Because Curry has a functional subset, these tools can canonically be

transferred to the functional world.

Further reading

Agda is a dependently typed functional programming language (developed

using Haskell). A central feature of Agda is inductive families, i.e.

GADTs which can be indexed by values and not just types. The

language also supports coinductive types, parameterized modules, and

mixfix operators, and comes with an interactive interface—the

type checker can assist you in the development of your code.
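
As a point of comparison in Haskell itself, the following sketch shows a GADT indexed by a type-level natural number. It illustrates the "indexed by types" case that inductive families generalize to arbitrary values; it is Haskell (with GHC extensions), not Agda, and the names `Vec`, `vhead`, and `vtoList` are chosen for illustration.

```haskell
{-# LANGUAGE DataKinds, GADTs, KindSignatures #-}

-- Natural numbers, promoted to the type level by DataKinds.
data Nat = Zero | Succ Nat

-- Lists whose length is tracked in the type.
data Vec (n :: Nat) a where
  Nil  :: Vec 'Zero a
  Cons :: a -> Vec n a -> Vec ('Succ n) a

-- Total head: the type rules out the empty vector, so no Nil case is needed.
vhead :: Vec ('Succ n) a -> a
vhead (Cons x _) = x

-- Forget the length index.
vtoList :: Vec n a -> [a]
vtoList Nil         = []
vtoList (Cons x xs) = x : vtoList xs
```

An application such as `vhead Nil` is rejected by the type checker. In a dependently typed language the index can be an ordinary run-time value rather than a promoted type.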

A lot of work remains in order for Agda to become a full-fledged

programming language (good libraries, mature compilers, documentation,

etc.), but already in its current state it can provide lots of fun as

a platform for experiments in dependently typed programming.

New since last time:

- Version 2.2.6 has been released, with experimental support for

universe polymorphism.

- FreeBSD users can now install Agda using FreshPorts.

Further reading

The Agda Wiki: http://wiki.portal.chalmers.se/agda/

Idris is an experimental language with full dependent types.

Dependent types allow types to be predicated on values, meaning that

some aspects of a program’s behavior can be specified precisely in

the type. The language is closely related to Epigram and Agda (→3.2.2).

It is available from http://www.idris-lang.org, and there is a

tutorial at http://www.cs.st-andrews.ac.uk/~eb/Idris/tutorial.html.

Idris aims to provide a platform for realistic programming with

dependent types. By realistic, we mean the ability to interact with

the outside world and use primitive types and operations, to make a

dependently typed language suitable for systems programming. This

includes networking, file handling, concurrency, etc.

Idris emphasizes programming over theorem proving, but nevertheless

integrates with an interactive theorem prover. It is compiled, via C,

and uses the Boehm-Demers-Weiser garbage collector.

One goal of the project is to show that Idris, and dependently typed

programming in general, can be efficient enough for the development of

real world verified software. To this end, Idris is currently being used

to develop a library for verified network protocol implementation,

with example applications.

Further reading

http://www.idris-lang.org/

Clean is a general purpose, state-of-the-art, pure and lazy functional

programming language designed for making real-world applications. Clean is the

only functional language in the world which offers uniqueness typing.

This type system makes it possible in a pure functional language to incorporate

destructive updates of arbitrary data structures (including arrays) and to make

direct interfaces to the outside imperative world.

Here is a short list of notable features:

- Clean is a lazy, pure, and higher-order functional programming language with

explicit graph-rewriting semantics.

- Although Clean is by default a lazy language, one can smoothly turn it into

a strict language to obtain optimal time/space behavior: functions can be

defined lazy as well as (partially) strict in their arguments; any (recursive)

data structure can be defined lazy as well as (partially) strict in any of its

arguments.

- Clean is a strongly typed language based on an extension of the well-known

Milner/Hindley/Mycroft type inferencing/checking scheme including the common

higher-order types, polymorphic types, abstract types, algebraic types, type

synonyms, and existentially quantified types.

- The uniqueness type system in Clean makes it possible to develop efficient

applications. In particular, it allows a refined control over the single

threaded use of objects which can influence the time and space behavior of

programs. The uniqueness type system can be also used to incorporate destructive

updates of objects within a pure functional framework. It allows destructive

transformation of state information and enables efficient interfacing to the

non-functional world (to C but also to I/O systems like X-Windows) offering

direct access to file systems and operating systems.

- The Clean type system supports dynamic types, allowing values of arbitrary types to be

wrapped in a uniform package and unwrapped via a type annotation at run-time. Using dynamics,

code and data can be exchanged between Clean applications in a flexible and type-safe way.

- Clean supports type classes and type constructor classes to make overloaded

use of functions and operators possible.

- Clean offers records and (destructively updateable) arrays and files.

- Clean has pattern matching, guards, list comprehensions, array

comprehensions and a layout-sensitive mode.

- Clean offers a sophisticated I/O library with which window based

interactive applications (and the handling of menus, dialogs, windows, mouse,

keyboard, timers, and events raised by sub-applications) can be specified

compactly and elegantly on a very high level of abstraction.

- There is a Clean IDE and there are many libraries available offering

additional functionality.

Future plans

Please see the entry on a Haskell frontend for the Clean

compiler (→2.5) for the future plans.

Further reading

Timber is a general programming language derived from Haskell, with the specific aim of supporting development of complex event-driven systems. It allows programs to be conveniently structured in terms of objects and reactions, and the real-time behavior of reactions can furthermore be precisely controlled via platform-independent timing constraints. This property makes Timber particularly suited to both the specification and the implementation of real-time embedded systems.

Timber shares most of Haskell’s syntax but introduces new primitive constructs for defining classes of reactive objects and their methods. These constructs live in the Cmd monad, which is a replacement of Haskell’s top-level monad offering mutable encapsulated state, implicit concurrency with automatic mutual exclusion, synchronous as well as asynchronous communication, and deadline-based scheduling. In addition, the Timber type system supports nominal subtyping between records as well as datatypes, in the style of its precursor O’Haskell.

A particularly notable difference between Haskell and Timber is that Timber uses a strict evaluation order. This choice has primarily been motivated by a desire to facilitate more predictable execution times, but it also brings Timber closer to the efficiency of traditional execution models. Still, Timber retains the purely functional characteristic of Haskell, and also supports construction of recursive structures of arbitrary type in a declarative way.

The latest release of the Timber compiler system is v 1.0.3 and dates back to May 2009. More recent developments are available in the on-line source code repository, including a new way of organizing and accessing external interfaces, a simplified command syntax, and many bug fixes. A proper release of this version is in the making and will be announced before summer 2010.

The new view of external interfaces separates access to OS, hardware or library services from the definition of a particular run-time system. This move greatly simplifies the construction of both external bindings and cross-compilation targets, which is utilized in on-going development of Xlib, OpenGL, iPhone as well as ARM7 support. Some of these targets will be part of the upcoming release, while others are scheduled for a follow-up at the end of the year. This later release will also contain a newly developed back-end targeting Javascript and HTML5, with the purpose of making Timber applicable to web programming in a reactive and strongly typed fashion.

Other active projects include interfacing the compiler to memory and execution-time analysis tools, extending it with a supercompilation pass, and taking a fundamental grip on the generation of type error messages. The latter work will be based on principles developed for the Helium compiler (→2.3).

Further reading

http://timber-lang.org

Ur/Web is a domain-specific language for building modern web applications. It is built on top of the Ur language as a custom standard library with special compiler support. Ur draws inspiration from a number of sources in the world of statically-typed functional programming. From Haskell, Ur takes purity, type classes, and monadic IO. From ML, Ur takes eagerness and a module system with functors and type abstraction. From the world of dependently-typed programming, Ur takes a rich notion of type-level computation.

The Ur/Web extensions support the core features of today’s web applications: “Web 1.0” programming with links and forms, “Web 2.0” programming with non-trivial client-side code, and interaction with SQL database backends. Considering programmer productivity, security, and scalability, Ur/Web has significant advantages over the mainstream web frameworks. Novel facilities for statically-typed metaprogramming enable new styles of abstraction and modularity. The type system guarantees that all kinds of code interpretable by browsers or database servers are treated as richly-typed syntax trees (along the lines of familiar examples of GADTs), rather than as “strings”, thwarting code injection attacks. The whole-program optimizing compiler generates fast native code which does not need garbage collection.
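
The idea of treating query code as typed syntax trees rather than strings can be sketched with a small Haskell GADT. This is not Ur/Web code and not its API; the `Expr` type, its constructors, and `render` are hypothetical and cover only a tiny fragment of SQL-like expressions.

```haskell
{-# LANGUAGE GADTs #-}

-- A typed expression tree: the index records the type of the expression.
data Expr a where
  IntLit :: Int -> Expr Int
  StrLit :: String -> Expr String
  Column :: String -> Expr a            -- column name, assumed to be trusted
  Equals :: Expr a -> Expr a -> Expr Bool
  And    :: Expr Bool -> Expr Bool -> Expr Bool

-- Rendering is the only place where text is produced, so string literals
-- are always quoted and escaped in one spot.
render :: Expr a -> String
render (IntLit n)   = show n
render (StrLit s)   = "'" ++ concatMap escape s ++ "'"
  where
    escape '\'' = "''"
    escape c    = [c]
render (Column c)   = c
render (Equals a b) = "(" ++ render a ++ " = " ++ render b ++ ")"
render (And a b)    = "(" ++ render a ++ " AND " ++ render b ++ ")"
```

User input can only enter a query through `StrLit` or `IntLit`, so a value such as `O'Brien` is rendered as an escaped literal, and an ill-typed comparison such as `Equals (IntLit 1) (StrLit "x")` does not type-check.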

The open source toolset is in beta release now and should be usable for real projects. I expect the core feature set to change little in the near future, and the next few releases will probably focus on bug fixes and browser compatibility.

Further reading

http://www.impredicative.com/ur/

4 Tools

4.1 Transforming and Generating

UUAG is the Utrecht University Attribute Grammar system. It is a preprocessor for Haskell which makes it easy to write catamorphisms (i.e., functions that do to any datatype what foldr does to lists). You define tree walks using the intuitive concepts of inherited and synthesized attributes, while keeping the full expressive power of Haskell. The generated tree walks are efficient in both space and time.

An AG program is a collection of rules, which are pure Haskell functions between attributes.

Idiomatic tree computations are neatly expressed in terms of copy, default, and collection rules. Attributes themselves can masquerade as subtrees and be analyzed accordingly (higher-order attribute). The order in which to visit the tree is derived automatically from the attribute computations. The tree walk is a single traversal from the perspective of the programmer.
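
To make the terminology concrete, here is the classic "repmin" problem written by hand in plain Haskell: a catamorphism whose result takes an inherited attribute (the global minimum) and returns two synthesized attributes (the local minimum and the rebuilt tree). This is neither UUAG input syntax nor code generated by UUAG; the names `Tree`, `foldTree`, `Sem`, and `repmin` are chosen for illustration.

```haskell
data Tree = Leaf Int | Bin Tree Tree
  deriving (Eq, Show)

-- The catamorphism for Tree: replace each constructor by a function.
foldTree :: (Int -> r) -> (r -> r -> r) -> Tree -> r
foldTree leaf bin = go
  where
    go (Leaf n)  = leaf n
    go (Bin l r) = bin (go l) (go r)

-- Semantics of a node: inherited global minimum in,
-- synthesized (local minimum, rebuilt tree) out.
type Sem = Int -> (Int, Tree)

semLeaf :: Int -> Sem
semLeaf n gmin = (n, Leaf gmin)

semBin :: Sem -> Sem -> Sem
semBin l r gmin = (min ml mr, Bin tl tr)
  where
    (ml, tl) = l gmin   -- the inherited attribute is copied to both children
    (mr, tr) = r gmin

-- Tie the knot: the synthesized minimum of the root is fed back as the
-- inherited attribute. Laziness makes this a single traversal.
repmin :: Tree -> Tree
repmin t = result
  where
    (m, result) = foldTree semLeaf semBin t m
```

For example, `repmin (Bin (Leaf 3) (Bin (Leaf 1) (Leaf 5)))` yields a tree of the same shape in which every leaf holds `1`. An attribute grammar system lets the copying of `gmin` and the tupling of results be left implicit.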

Nonterminals (data types), productions (data constructors), attributes, and rules for attributes can be specified separately, and are woven and ordered automatically. Recently, we enhanced these aspect-oriented programming features. It is now possible to add rules that transform a value of a synthesized attribute, or to transform a child and its inherited and synthesized attributes.

The system is in use by a variety of large and small projects, such as the Utrecht Haskell Compiler UHC (→2.4), the editor Proxima for structured documents (→6.4.5), the Helium compiler (→2.3), the Generic Haskell compiler, UUAG itself, and many master student projects. The current version is 0.9.19 (April 2010), is extensively tested, and is available on Hackage.

We are working on the following enhancements of the UUAG system, building on attribute grammar research of the past in a modern setting:

- Parallel evaluation: We aim to evaluate attributes in parallel to take advantage of current multi-core processors.

The static dependencies between attributes allow us to identify

parts that are independent and schedule them simultaneously in a safe and efficient way.

- Incremental evaluation: We generate code that adapts incrementally to changes in the input data. The goal is to reuse

the outcome of previous computations, by recomputing only those parts of the computation that

are affected by the changes.

- Fixpoint evaluation: When static dependencies between attributes are circular, results can still be computed through

lazy evaluation when the dynamic dependencies are not. We are now incorporating a fixed-point

evaluation scheme that computes a result even in case of a dynamic cycle.

Furthermore, we investigate extensions of the AG formalism to make AGs more suitable to express

type inferencing. We made prototype implementations that facilitate

the dynamic construction of inference trees, to use AGs for bidirectional

type rules and constraint solving.

Further reading

AspectAG is a library of strongly typed Attribute Grammars implemented using type-level programming.

Introduction

Attribute Grammars (AGs), a general-purpose formalism for describing recursive computations over data types,

avoid the trade-off which arises when building software incrementally:

should it be easy to add new data types and data type alternatives or to add new operations on existing data types?

However, AGs are usually implemented as a pre-processor,

leaving e.g. type checking to later processing phases and making interactive development,

proper error reporting and debugging difficult.

Embedding AG into Haskell as a combinator library solves these problems.

Previous attempts at embedding AGs as a domain-specific language were based on extensible records, thus exploiting

Haskell’s type system to check the well-formedness of the AG,

but fell short in compactness and the possibility to abstract over oft occurring AG patterns.

Other attempts used a very generic mapping for which the AG well-formedness could not be statically checked.

We present a typed embedding of AG in Haskell satisfying all these requirements.

The key lies in using HList-like typed heterogeneous collections (extensible polymorphic records)

and expressing AG well-formedness conditions as type-level predicates (i.e., typeclass constraints).

By further type-level programming we can also express common programming patterns,

corresponding to the typical use cases of monads such as Reader, Writer, and State.

The paper presents a realistic example of type-class-based type-level programming in Haskell.

Background

The approach taken in AspectAG was proposed by Marcos Viera,

Doaitse Swierstra, and Wouter Swierstra in the

ICFP 2009 paper

“Attribute Grammars Fly First-Class: How to do aspect oriented programming in Haskell”.

Further reading

http://www.cs.uu.nl/wiki/bin/view/Center/AspectAG

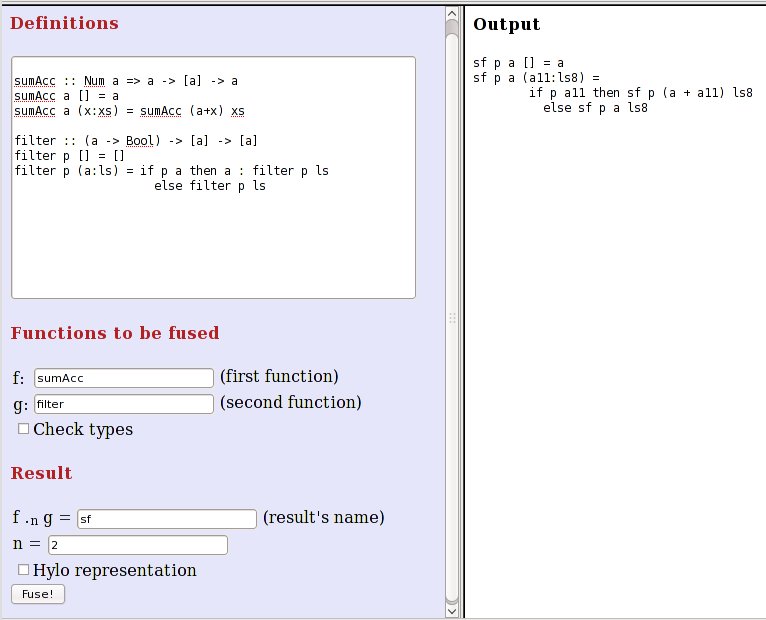

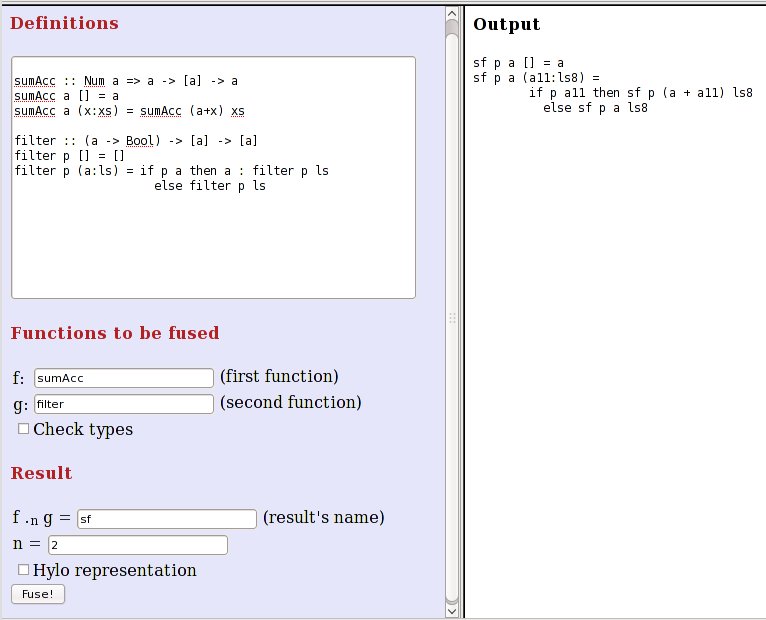

HFusion is an experimental tool for optimizing Haskell programs.

It is based on an algebraic approach where functions are internally represented

in terms of a recursive program scheme known as hylomorphism.

The tool performs source to source transformations by the application of a program

transformation technique called fusion. The aim of fusion is to reduce

memory management effort by eliminating the intermediate data structures produced in function compositions.
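
A hand-written illustration of what fusion achieves is given below. It is not output of HFusion, and the function names are chosen for illustration; it only shows a composition that builds an intermediate list next to an equivalent definition that does not.

```haskell
-- Unfused: map builds an intermediate list that sum then consumes.
sumSquares :: [Int] -> Int
sumSquares = sum . map (\x -> x * x)

-- Fused by hand: one recursive function, no intermediate list.
sumSquaresFused :: [Int] -> Int
sumSquaresFused []       = 0
sumSquaresFused (x : xs) = x * x + sumSquaresFused xs
```

Both definitions compute the same result, for instance `385` on `[1 .. 10]`; the second one avoids allocating the list of squares.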

We offer a web interface to test the technique on user-supplied recursive definitions.

The user can ask HFusion to transform a composition of two functions into an equivalent

program which does not build the intermediate data structure involved in the composition.

In future developments of the tool we plan to find fusable compositions within programs automatically.

In its current state, HFusion is able to fuse compositions of general recursive functions,

including primitive recursive functions like dropWhile or factorial, functions that make

recursion over multiple arguments like zip, zipWith or equality predicates, mutually recursive

functions, and (with some limitations) functions with accumulators like foldl. In general,

HFusion is able to eliminate intermediate data structures of regular data types

(sum-of-product types plus different forms of generalized trees).

Further reading

- Documentation about the tool can be found in

HFusion home

- HFusion web interface is available from this

URL

Optimus Prime is a project developing a supercompiler for programs written in F-lite, the subset of Haskell used by the Reduceron (→2.6). It draws heavily on Neil Mitchell’s work on the Supero supercompiler for YHC Core.

The project is still at the highly experimental stage but preliminary results are very encouraging. The process appears to produce largely deforested programs where higher-order functions have been specialized. This, as a consequence, appears to enable further gains from mechanisms such as speculative evaluation of primitive redexes on the Reduceron architecture.

Optimus Prime supercompilation has led to a 74% reduction in the number of Reduceron clock-cycles required to execute some micro-examples.

Work continues on improving the execution time of the supercompilation transformation and improving the performance of the supercompiled programs.

Contact

http://www.cs.york.ac.uk/people/?username=jason

Further reading

http://optimusprime.posterous.com/

The Derive tool is used to generate formulaic instances for data types. For example given

a data type, the Derive tool can generate 34 instances, including the standard ones

(Eq, Ord, Enum etc.) and others such as Binary and Functor. Derive can be used with SYB,

Template Haskell or as a standalone preprocessor. This tool serves a similar role to DrIFT,

but with additional features.
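
To show what "formulaic" means here, the following instances are written out by hand for a small example type. They are not output of Derive, and the `Tree` type is hypothetical; the point is that each instance follows mechanically from the shape of the data declaration.

```haskell
-- A small example type with a type parameter.
data Tree a = Leaf | Node (Tree a) a (Tree a)

-- Structural equality: same constructor, equal fields.
instance Eq a => Eq (Tree a) where
  Leaf         == Leaf         = True
  Node l1 x r1 == Node l2 y r2 = l1 == l2 && x == y && r1 == r2
  _            == _            = False

-- Apply the function at every position of the parameter type.
instance Functor Tree where
  fmap _ Leaf         = Leaf
  fmap f (Node l x r) = Node (fmap f l) (f x) (fmap f r)
```

Writing such instances for every new type is repetitive and easy to get wrong, which is the work a generator takes over.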

Recently Derive has had many derivations added, including new Uniplate (→5.8.1) instances. The

mechanism to derive instances by example has been rewritten, and the revised mechanism is

described in the associated Approaches and Applications of Inductive Programming 2009 paper.

Further reading

http://community.haskell.org/~ndm/derive/

The Agata library (Agata Generates Algebraic Types Automatically) is an outcome of my master’s thesis work at Chalmers University of Technology. The library uses Template Haskell to derive instances of the QuickCheck Arbitrary class for (almost) any Haskell data type.

The generators differ from regular QuickCheck generators in that they maintain scalability even for types analogous to nested collection data structures (e.g., [[[[a]]]], where the standard QuickCheck generator tends to generate values that contain millions of a’s). Generators also guarantee that independent components of the same type have the same expected size, e.g., in (a,[a]) the single a will have the same expected size as any a in the list.

Although a few additional features are to be implemented in the near future, efforts will be focused on documentation and improving performance. When the library is stable and well documented, the possibility of integrating it into the QuickCheck package may be explored.

Further reading

lhs2TeX, by Ralf Hinze and Andres Löh,

is a preprocessor that transforms literate Haskell code

into LaTeX documents. The output is highly customizable

by means of formatting directives that are interpreted

by lhs2TeX. Other directives allow the selective inclusion

of program fragments, so that multiple versions of a program

and/or document can be produced from a common source.

The input is parsed using a liberal parser that can interpret

many languages with a Haskell-like syntax, and does not restrict

the user to Haskell 98.

The program is stable and can take on large documents.

Since version 1.14, lhs2TeX has an experimental mode for

typesetting Agda code.

The current version is 1.15. Due to changes in the handling

of Unicode in ghc-6.12, this version should be built with ghc-6.10.

In the near future, version 1.16 will be released, which will

hopefully behave correctly when built with ghc-6.12.

Further reading

http://www.cs.uu.nl/~andres/lhs2tex

4.2 Analysis and Profiling

4.2.1 HTF: a test framework for Haskell

The Haskell Test Framework (HTF for short) lets you define unit

tests, QuickCheck properties, and black box tests in an easy and

convenient way. The HTF uses a custom preprocessor that collects test

definitions automatically. Furthermore, the preprocessor allows the HTF to

report failing test cases with exact file name and line number

information.

Initially created in 2005, HTF was not actively developed for almost five

years. Development resumed in 2010, adding many improvements to the code

base.

Further reading

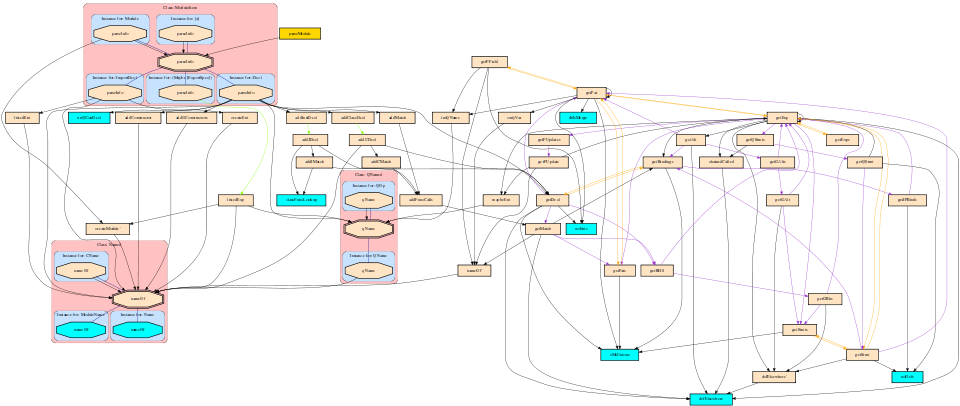

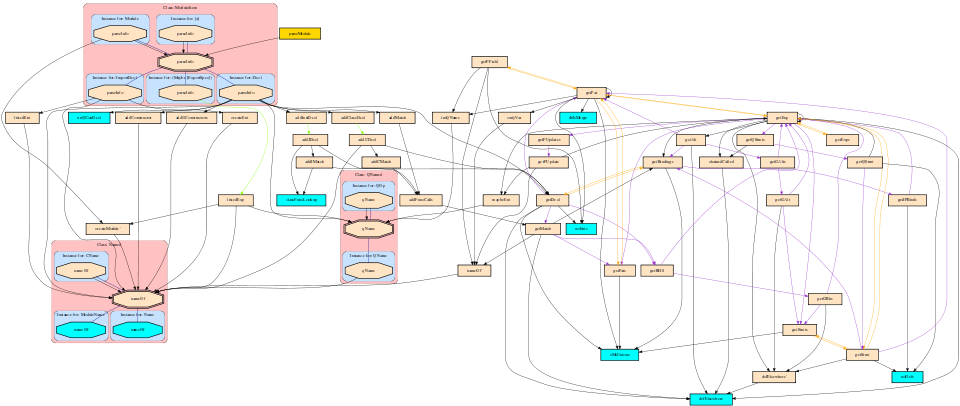

SourceGraph is a utility program aimed at helping Haskell programmers

visualize their code and perform simple graph-based analysis

(representing entities as nodes in the graphs and function calls as

directed edges). It started off as an example of how to use the

Graphalyze library (→5.7.1), which is designed as a general-purpose

graph-theoretic analysis library. These two pieces of software were

originally developed as the focus of my mathematical honors thesis,

“Graph-Theoretic Analysis of the Relationships Within Discrete

Data”.

Whilst fully usable, SourceGraph is currently limited in terms of

input and output. It analyses all .hs and .lhs

files recursively found in the provided directory, parsing most

aspects of Haskell code (it cannot parse code that uses CPP, HaRP,

TH, FFI, or XML-based Haskell, and it has difficulty with Data Family

instances, unknown modules, and record puns and wildcards). The

results of the analysis are written to an HTML file in a

“SourceGraph” subdirectory of the project’s root directory.

Various refinements have been implemented since the last release,

including:

- “Implicitly exported” entities (e.g., class method instance

definitions from external classes) are now supported; support for

these is not perfect and may include more entities than it should.

- Addition of depth analysis (based upon how many function calls

are needed from an exported entity).

- Better visualizations, including edge categorizations; the

generated Dot code is also saved if users wish to tweak these.

Current analysis algorithms utilized include: alternative module

groupings, whether a module should be split up, root analysis, depth

analysis, clique and cycle detection, as well as finding functions

which can safely be compressed down to a single function. Please note

however that SourceGraph is not a refactoring utility, and that

its analyses should be taken with a grain of salt: for example, it

might recommend that you split up a module, because there are several

distinct groupings of functions, when that module contains common

utility functions that are placed together to form a library module

(e.g., the Prelude).
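
Two of the analyses named above, root analysis and cycle detection, can be sketched on a toy call graph using `Data.Graph` from the `containers` package. This does not use SourceGraph or Graphalyze and makes no claim about their algorithms; the `CallGraph` representation and the function names are assumptions for illustration.

```haskell
import Data.Graph (SCC (..), stronglyConnComp)
import Data.List (sort)

-- A call graph: each function paired with the functions it calls.
type CallGraph = [(String, [String])]

-- Groups of functions that are (mutually) recursive, found as the
-- cyclic strongly connected components of the call graph.
recursiveGroups :: CallGraph -> [[String]]
recursiveGroups calls =
  sort [sort fs | CyclicSCC fs <- stronglyConnComp nodes]
  where
    nodes = [(f, f, callees) | (f, callees) <- calls]

-- Roots: functions that no other function calls.
roots :: CallGraph -> [String]
roots calls = sort [f | (f, _) <- calls, f `notElem` called]
  where
    called = [g | (f, gs) <- calls, g <- gs, g /= f]

-- A small hypothetical program.
example :: CallGraph
example =
  [ ("main",   ["parse", "render"])
  , ("parse",  ["expr"])
  , ("expr",   ["term"])
  , ("term",   ["expr"])
  , ("render", [])
  ]
```

On `example`, `roots` reports only `main`, and `recursiveGroups` reports the single group containing `expr` and `term`.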

Sample SourceGraph analysis reports can be found at

http://code.haskell.org/~ivanm/Sample_SourceGraph/SampleReports.html.

A tool paper on SourceGraph was presented at the ACM SIGPLAN 2010

Workshop on Partial Evaluation and Program Manipulation.

Further reading

HLint is a tool that reads Haskell code and suggests changes to make it simpler.

For example, if you call maybe foo id it will suggest using fromMaybe foo instead.

HLint is compatible with almost all Haskell extensions, and can be easily extended with additional hints.
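
The suggestion mentioned above, spelled out on a small hypothetical function (the names and the default value `8080` are made up for illustration):

```haskell
import Data.Maybe (fromMaybe)

-- Before: the form for which the hint is given.
portBefore :: Maybe Int -> Int
portBefore mp = maybe 8080 id mp

-- After: the suggested, equivalent form.
portAfter :: Maybe Int -> Int
portAfter mp = fromMaybe 8080 mp
```

Both functions return the wrapped value for `Just` and the default for `Nothing`.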

There have been numerous feature improvements since the last HCAR. HLint supports Unicode and more fully

integrates with a C pre-processor. Many hints have been added, some of which were submitted by users. A

new mode has been added to hunt for suitable hints given source code. There have been substantial speed

improvements.

Further reading

http://community.haskell.org/~ndm/hlint/

4.2.4 A Haskell source file scanning tool

The Haskell source file scanning tool scan is supposed to be a

complement for hlint (→4.2.3). Whereas hlint makes suggestions to

improve your expressions, scan makes suggestions about your source

file format regarding white spaces, layout and comments, as usually

described by style guides.

The scan tool is also able to write back an untabified file without

trailing white space, with proper blanks around infix operators and after

commas, and with a single final newline.
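Some of the clean-ups described above can be sketched in a few lines of Haskell. This is not the implementation used by scan, only an illustration of untabifying, stripping trailing white space, and ending the file with a single newline; the function names and the tab width of eight columns are assumptions, and the spacing around operators and commas is not covered.

```haskell
import Data.Char (isSpace)
import Data.List (dropWhileEnd)

-- Expand each tab to the next multiple of eight columns
-- (the tab width is an assumption made for this sketch).
expandTabs :: String -> String
expandTabs = go 0
  where
    go :: Int -> String -> String
    go _ [] = []
    go col ('\t' : cs) =
      let n = 8 - col `mod` 8
      in replicate n ' ' ++ go (col + n) cs
    go col (c : cs) = c : go (col + 1) cs

-- Drop trailing white space from a single line.
stripTrailing :: String -> String
stripTrailing = dropWhileEnd isSpace

-- Normalize a whole file: untabify, strip trailing white space,
-- drop blank lines at the end, and finish with exactly one newline.
tidy :: String -> String
tidy = unlines . dropWhileEnd null . map (stripTrailing . expandTabs) . lines

-- Reads a source file on standard input and writes the tidied text.
main :: IO ()
main = interact tidy
```

Because tidy is a pure function from String to String, applying it twice gives the same result as applying it once, which keeps repeated runs from producing further white space changes.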

I use this tool to keep my Haskell sources tidy and reduce mere white space

changes in evolving revisions. You are encouraged to do so, too.

Further reading

http://projects.haskell.org/style-scanner/

This project was born during the 2009 Google Summer of Code under the

name “Improving space profiling experience”. The name hp2any covers

a set of tools and libraries to deal with heap profiles of Haskell

programs. At the present moment, the project consists of three

packages:

- hp2any-core: a library offering functions to read heap

profiles during and after run, and to perform queries on them.

- hp2any-graph: an OpenGL-based live grapher that can

show the memory usage of local and remote processes (the latter

using a relay server included in the package), and a library

exposing the graphing functionality to other applications.

- hp2any-manager: a GTK application that can display

graphs of several heap profiles from earlier runs.
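To give an idea of the kind of query such tools perform on a heap profile, here is an independent sketch that does not use the hp2any-core API. It assumes the textual .hp layout in which each sample is bracketed by BEGIN_SAMPLE and END_SAMPLE lines carrying a timestamp, with one tab-separated pair of name and byte count per line in between; the type and function names are hypothetical.

```haskell
import Data.Maybe (mapMaybe)

-- One sample: a timestamp and (cost centre, bytes) pairs.
data Sample = Sample
  { sampleTime  :: Double
  , sampleCosts :: [(String, Int)]
  } deriving (Show, Eq)

-- Parse all samples of a profile, skipping header lines such as JOB or DATE.
-- Lines that do not fit the assumed layout are ignored.
parseSamples :: String -> [Sample]
parseSamples = go . lines
  where
    go [] = []
    go (l : ls) = case words l of
      ["BEGIN_SAMPLE", t]
        | [(time, "")] <- reads t ->
            let (body, rest) = break isEnd ls
            in Sample time (mapMaybe entry body) : go (drop 1 rest)
      _ -> go ls

    isEnd l = take 1 (words l) == ["END_SAMPLE"]

    -- A cost line is assumed to be "<name>\t<bytes>".
    entry l = case break (== '\t') l of
      (name, '\t' : n) | [(v, "")] <- reads n -> Just (name, v)
      _ -> Nothing

-- Total number of bytes recorded in one sample.
totalBytes :: Sample -> Int
totalBytes = sum . map snd . sampleCosts

-- Reads a profile on standard input and prints time and total per sample.
main :: IO ()
main = do
  profile <- getContents
  mapM_ (\s -> putStrLn (show (sampleTime s) ++ "\t" ++ show (totalBytes s)))
        (parseSamples profile)
```

The per-sample totals printed by main are the series a grapher would plot as overall memory usage over time.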

The project also aims at replacing hp2ps by reimplementing it in

Haskell and possibly adding new output formats. The manager

application shall be extended to display and compare the graphs in

more ways, to export them in other formats and also to support live

profiling right away instead of delegating that task to hp2any-graph.

Further reading

http://www.haskell.org/haskellwiki/Hp2any

4.3 Development

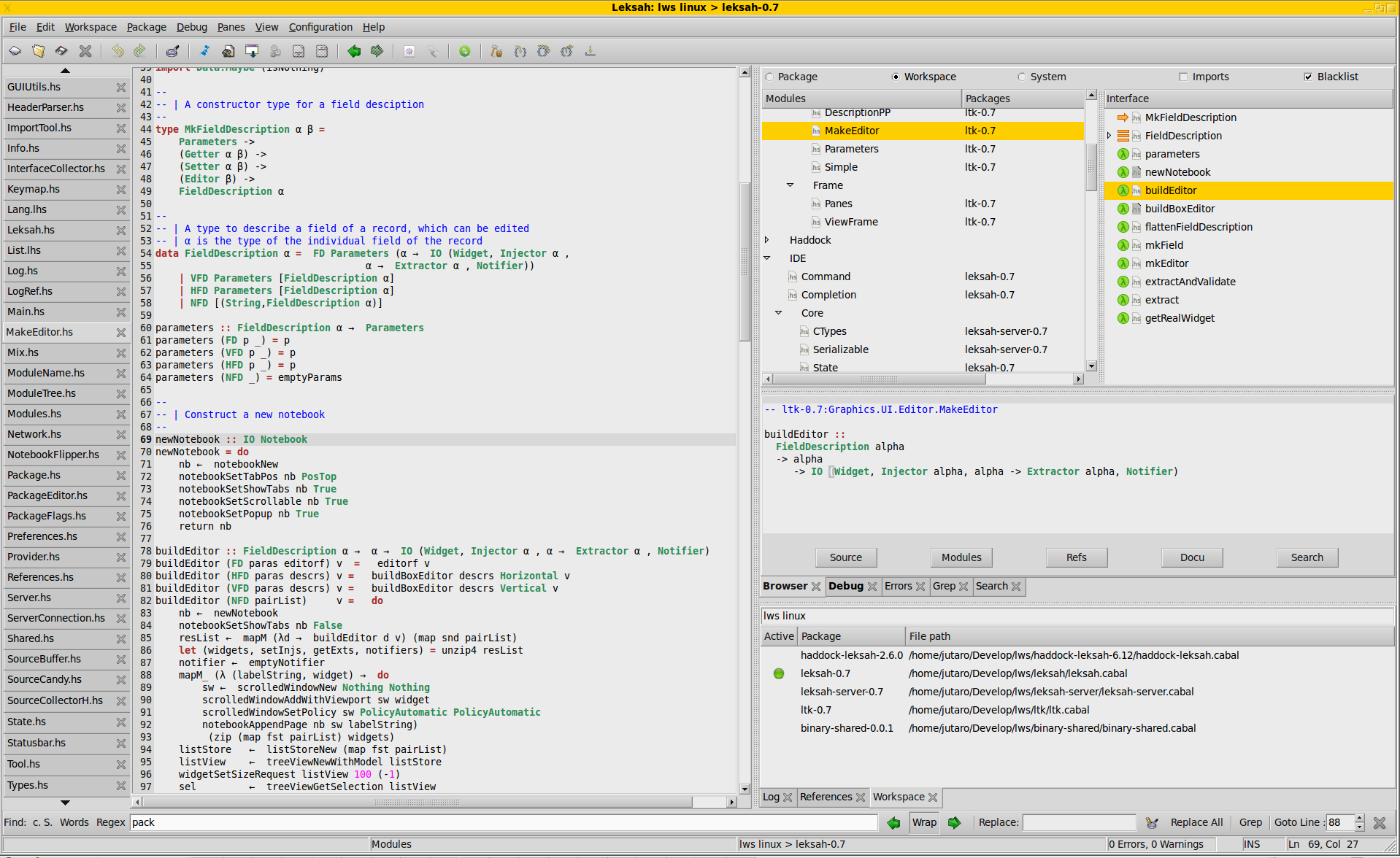

4.3.1 Leksah — Toward a Haskell IDE

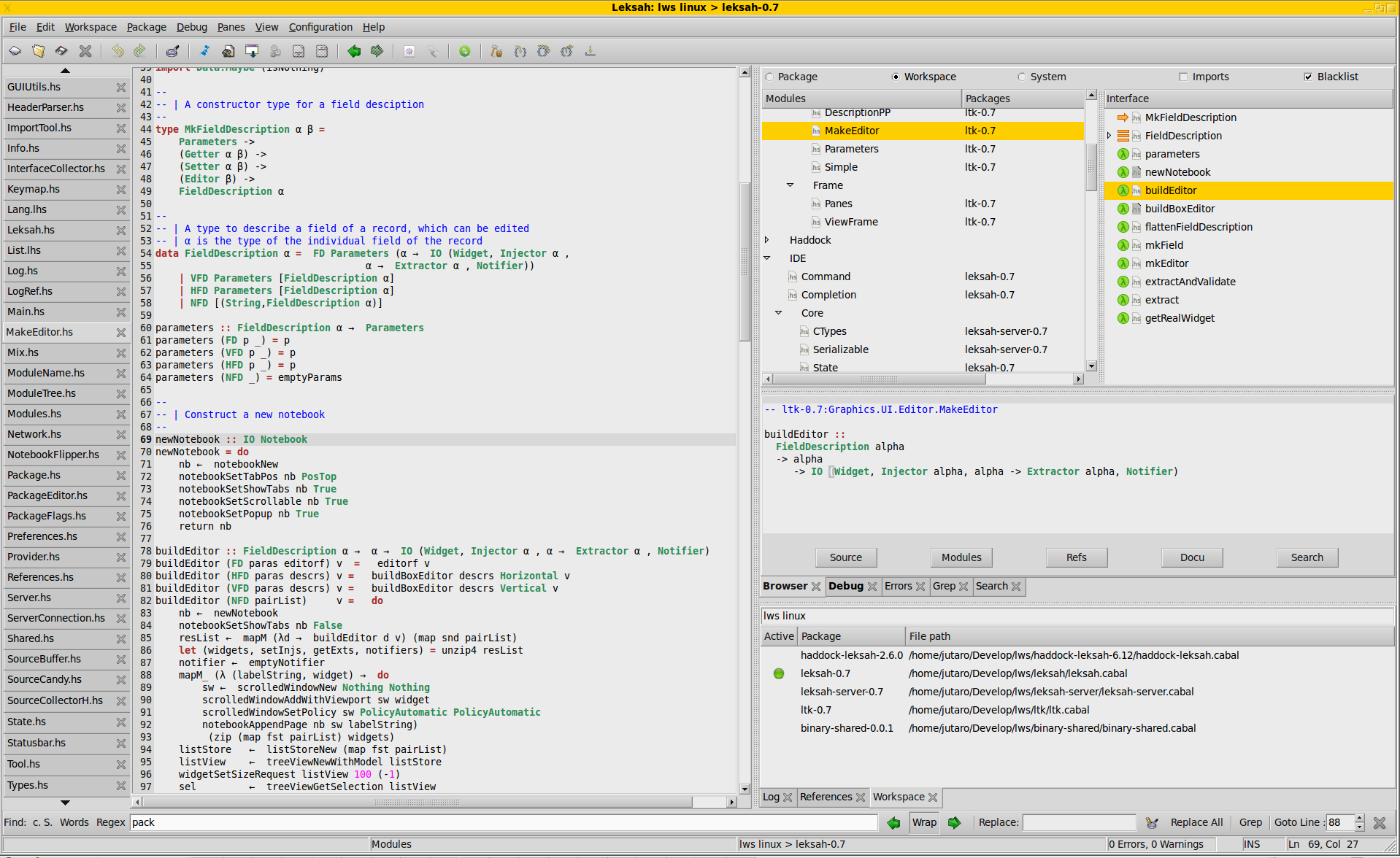

Leksah is a Haskell IDE written in Haskell; it uses Gtk+ and runs on

Linux, Windows, and Mac OS X. Leksah is intended to be a practical tool to

support the Haskell development process. Leksah is completely free.

Some features of Leksah:

- It uses the cabal package format and incorporates a cabal file

editor.

- It offers Workspaces for complex projects with multiple packages

with automatic build of dependencies.

- It contains a module browser that allows you to find type

information about all the functions/symbols available in the

packages installed on your system.

- For most packages it also shows Haddock-style comments, and

gives direct navigation to sources.

- It integrates ghci debugging (including continuous

recompilation) that allows you to type check and evaluate

highlighted code snippets from within the editor itself.

Includes a scratch buffer for testing ideas.

- It includes a helper for automatic addition of import

statements.

- Offers a Haskell-customized editor with “source candy”.

- Multi-window support for a multi head setting.

- Many standard features of IDEs like: Jump to errors, Auto

Completion, Grep integration, …

- Configurable with session support, keymaps, and flexible

appearance.

Future plans

- Enhance usability and fix open bugs for the 1.0 release.

- Concept and implementation of an extension mechanism.

- Better integration of Yi as editor component.

The project needs more users and developers!

Further reading

http://leksah.org/

4.3.2 HEAT: The Haskell Educational Advancement Tool

Heat is an interactive development environment (IDE) for learning and teaching Haskell. Heat was designed for novice students learning Haskell. Heat provides a small number of supporting features and is easy to use. Heat is portable, small and works on top of Hugs.

Heat provides the following features:

- Editor for a single module with syntax-highlighting and matching brackets.

- Shows the status of compilation: non-compiled; compiled with or without error.

- Interpreter console that highlights the prompt and error messages.

- If compilation yields an error, then the source line is highlighted and additional error explanations are provided.

- Shows a program summary in a tree structure, giving definitions of types and types of functions …

- Automatic checking of all (Boolean) properties of a program; results shown in summary.

Over the summer of 2009, Heat was completely re-engineered to provide a simpler and cleaner internal structure for future development. This new version still lacks a few features compared to the current 3.1 version. (Small) modifications for making Heat work with GHC instead of Hugs have also been submitted. A new release is still in the works.

Further reading

http://www.cs.kent.ac.uk/projects/heat/

4.3.3 HaRe — The Haskell Refactorer

Refactorings are source-to-source program transformations which change

program

structure and organization, but not program functionality. Documented in

catalogs and supported by tools, refactoring provides the means to adapt and

improve the design of existing code, and has thus enabled the trend towards

modern agile software development processes.

Our project, Refactoring Functional Programs, has as its major

goal to

build a tool to support refactorings in Haskell. The HaRe tool is now in its

fifth major release. HaRe supports full Haskell 98, and is integrated

with Emacs

(and XEmacs) and Vim. All the refactorings that HaRe supports, including

renaming, scope change, generalization and a number of others, are

module

aware, so that a change will be reflected in all the modules in a project,

rather than just in the module where the change is initiated. The system

also

contains a set of data-oriented refactorings which together transform a

concrete data type and associated uses of pattern matching into an

abstract type and calls to assorted functions. The latest snapshots

support the

hierarchical modules extension, but only small parts of the hierarchical

libraries, unfortunately.

In order to allow users to extend HaRe themselves,

HaRe includes an API for users to define their own program

transformations, together with Haddock documentation.

Please let us know if you are using the API.

Snapshots of HaRe are available from our webpage, as are related

presentations and

publications from the group (including LDTA’05, TFP’05, SCAM’06,

PEPM’08, PEPM’10, Huiqing’s PhD thesis and Chris’s PhD thesis).

The final report for the project appears there, too.

Chris Brown has recently completed his PhD; his PhD thesis entitled “Tool

Support for Refactoring Haskell Programs” is available from our

webpage.

Recent developments

- More structural and datatype-based refactorings have been

studied by Chris Brown, including transformation between

let and where, generative folding, introducing

pattern matching, and introducing case expressions;

- Clone detection and elimination support has been added, to

allow the automatic detection and semi-automatic elimination of

duplicated code in Haskell.
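As an illustration of one of the structural refactorings listed above, the transformation between let and where, here is a hand-written before-and-after pair. It is not output produced by HaRe; the function names are made up for the example.

```haskell
-- Before: the local definition is bound with let.
areaLet :: Double -> Double
areaLet r =
  let sq = r * r
  in pi * sq

-- After: the same definition moved into a where clause.
areaWhere :: Double -> Double
areaWhere r = pi * sq
  where
    sq = r * r

main :: IO ()
main = do
  let radii = [0, 1, 2.5]
  -- The transformation changes structure only, so the results agree.
  print (map areaLet radii == map areaWhere radii)
```

The two definitions differ only in where sq is bound, which matches the notion of a refactoring as a change of structure without a change of functionality.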

Further reading

http://www.cs.kent.ac.uk/projects/refactor-fp/

DarcsWatch is a tool to track the state of Darcs (→6.1.1) patches that have been

submitted to some project, usually by using the darcs send command. It

allows both submitters and project maintainers to get an overview of

patches that have been submitted but not yet applied.

The DarcsWatch service has been moved to a new machine, urchin.earth.li, to

accommodate its growth.

DarcsWatch continues to be used by the xmonad project (→6.1.2), the Darcs

project itself, and a few developers.

At the time of writing, it was tracking 32 repositories and 2631 patches

submitted by 171 users.

Further reading

4.3.5 DPM — Darcs Patch Manager

The Darcs Patch Manager (DPM for short) is a tool that simplifies

working with the revision control system darcs (http://darcs.net). It is

most effective when used in an environment where developers do not push

their patches directly to the main repository but where patches undergo a

reviewing process before they are actually applied.

The current feature set of DPM is quite stable. In our company

(→7.5), we actively use DPM to keep track of all patches

sent to various projects. At the Haskell hackathon 2010 in Zürich, we

started working on support for tracking conflicts between patches. We have

not yet finished this work, but hope to provide a new DPM release with

support for conflicts in May 2010.

There is some overlap between DPM and darcswatch (→4.3.4). The

main difference between darcswatch and DPM is that the former mainly

targets developers whereas the latter helps reviewers do their work.

Further reading

Haskell FFI Binding Modules Generator (HSFFIG) is a tool which parses

C include files (.h) and generates Haskell Foreign Functions Interface

import declarations for all functions, #define’d constants (where

possible), enumerations, and structures/unions (to access their

members). It is assumed that the GNU C Compiler and Preprocessor are

used. Auto-generated Haskell modules may be imported into applications

to access the foreign library’s functions and variables.
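For readers unfamiliar with the kind of declaration being generated, the following hand-written sketch shows a Foreign Function Interface import for a single C function. It is not HSFFIG output, and the naming scheme is an assumption; it binds the standard C function cos from math.h.

```haskell
{-# LANGUAGE ForeignFunctionInterface #-}

import Foreign.C.Types (CDouble (..))

-- Hand-written import for the C function: double cos(double);
-- The Haskell-side name c_cos is chosen for this example only.
foreign import ccall unsafe "math.h cos"
  c_cos :: CDouble -> CDouble

-- Convenience wrapper that converts to and from Haskell's Double.
cosViaC :: Double -> Double
cosViaC = realToFrac . c_cos . realToFrac

main :: IO ()
main = print (cosViaC 0)
```

Writing such declarations by hand for every function, constant, and structure in a large header is tedious and error-prone, which is the work a binding generator takes over.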

HSFFIG has been in development since 2005, and was recently released

on Hackage. The current version is 1.1.2, which is mainly a bug-fix

release for version 1.1.

The package provides a small library to link with programs using

auto-generated imports, and two executable programs:

- hsffig: a filter program which reads pre-processed include files

from standard input, and produces one large .hsc file;

- ffipkg: a program which automates the process of building a Cabal

package out of C include files by automatically running hsffig

and the other tools necessary to build a Haskell package.

Further reading

Hubris is an in-process bridge between Ruby and Haskell, allowing Ruby

programs to use Haskell code without writing boilerplate.

It is now easier to install, and some 64-bit bugs have been fixed.

To get it on Linux:

cabal install hubris

gem install hubris

Mac OS X is a bit harder because support for dynamic libraries has not

been merged into the GHC mainline yet, but it is in the pipeline. Further

plans:

- work with new versions of Ruby without reinstallation of Hubris

Haskell-side support code

- support for passing RTS flags to the Haskell process

- translation instance injection (i.e., express equivalents for complex

Haskell datatypes in Ruby and vice versa)

- multiple argument support

- some way of storing non-translatable instances on the Ruby side:

ideally, you should be able to have a Ruby list of Haskell functions,

and apply each of them in turn. Currently only translatable data

types are marshalled.